OpenTelemetry Best Practices

NCCL and AI workloads operate in environments where every millisecond counts. High-frequency telemetry in the data center fabric is critical for service continuity and for meeting SLAs.

AI and NCCL workloads rely on highly synchronized, low-latency communication between multiple GPUs and nodes. Even slight network delays or congestion can bottleneck training or inference; therefore, constant, granular monitoring helps detect and address issues before they impact performance. With frequent telemetry, AI fabric operators can spot performance degradations, packet loss, or hardware faults in near real time. This immediate visibility allows for proactive troubleshooting and dynamic adjustments, keeping distributed operations smooth.

As AI workloads scale across many nodes, any inefficiency in the network fabric can add up to significant cumulative delays. High-frequency telemetry provides the data needed to identify and optimize these inefficiencies as the cluster grows, sustaining overall system efficiency.

Implementing telemetry requires careful planning and sizing. Design and implement your telemetry environment with the expected scale of your cluster in mind.

This knowledge base article outlines best practices for collecting and managing telemetry data from NVIDIA switches using the OpenTelemetry Protocol (OTLP). These best practices are based on the experience of various customers and help ensure efficient data collection, optimized data transfer, and scalable time series database (TSDB) storage.

NVIDIA provides these best practices only as observed in customer clusters; each component has its own ecosystem, configuration, and maintenance expertise.

Telemetry Components

The Collector

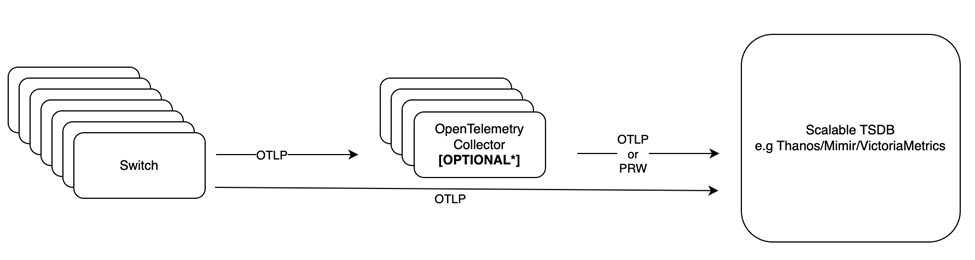

An OpenTelemetry collector is optional: the switch can push metrics directly to a TSDB that supports OTLP ingestion. Use a collector if the TSDB does not support OTLP ingestion.

The NVIDIA switch exports metrics in OTLP, while some TSDBs can only ingest Prometheus Remote Write (PRW). For those TSDBs, NVIDIA recommends using an OpenTelemetry collector to aggregate telemetry data from the switches and export it to the TSDB through PRW.

Follow these key guidelines:

- Switch-to-Collector Communication - Each switch must send telemetry data to a single OpenTelemetry collector instance (sticky assignment). This avoids issues related to out-of-order data processing and ensures consistent metadata association.

- Collector Scalability - You can deploy the OpenTelemetry collector with multiple replicas to handle high telemetry throughput. Configure load balancing to ensure each switch maintains a persistent connection to a single collector instance.

- Deployment Considerations:

  - Collector CPU and memory usage varies with scale, setup, and other environmental factors.

  - If a backend TSDB causes backpressure, the exporter queue on the collector grows, which increases memory usage. As a guideline, the collector exporter queue size should be 0; a nonzero, growing queue means the TSDB is not keeping up with the ingestion rate.
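Sticky assignment is typically configured on the load balancer in front of the collector replicas (for example, with client-IP affinity). To illustrate the idea only, the following Python sketch derives a deterministic switch-to-collector mapping with rendezvous (highest-random-weight) hashing: each switch always maps to the same replica, and if a replica is removed, only the switches that were assigned to it move. The replica and switch names are hypothetical, and this is not an NVIDIA or OpenTelemetry API.

```python
import hashlib


def _weight(switch_id: str, collector: str) -> int:
    """Deterministic pseudo-random weight for a (switch, collector) pair."""
    digest = hashlib.sha256(f"{switch_id}|{collector}".encode()).digest()
    return int.from_bytes(digest[:8], "big")


def assign_collector(switch_id: str, collectors: list[str]) -> str:
    """Rendezvous hashing: pick the collector with the highest weight.

    The result depends only on the switch ID and the set of collector
    replicas, so a switch keeps the same collector for as long as that
    replica exists (sticky assignment).
    """
    if not collectors:
        raise ValueError("no collector replicas available")
    return max(collectors, key=lambda c: _weight(switch_id, c))


if __name__ == "__main__":
    # Hypothetical replica and switch names.
    replicas = ["otel-collector-0", "otel-collector-1", "otel-collector-2"]
    switches = [f"leaf-{i:02d}" for i in range(8)]

    before = {s: assign_collector(s, replicas) for s in switches}
    # Simulate losing otel-collector-2: only its switches are reassigned.
    after = {s: assign_collector(s, replicas[:2]) for s in switches}
    for s in switches:
        print(f"{s}: {before[s]} -> {after[s]}")
```

Rendezvous hashing is chosen here because it needs no shared state between load balancer instances: any component that knows the replica list computes the same assignment.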

Data Export to TSDB

After the OpenTelemetry collector receives data, it must push the data to a TSDB for storage and analysis, using either OTLP or PRW depending on which ingestion methods the TSDB supports.

Follow these best practices for pushing data to the TSDB:

- (Collector only) Configure the OpenTelemetry collector to batch telemetry data before pushing it to the TSDB to reduce network overhead and improve ingestion performance.

- Protocol selection:

  - If the TSDB supports OTLP ingestion, configure the OpenTelemetry collector, or the switch directly, to push data in OTLP format. Exporting OTLP reduces the load on the collector, which does less processing because it does not translate the data to PRW, but increases the load on the TSDB, which translates OTLP into its proprietary internal format.

  - If the TSDB only supports PRW, configure the collector to export through PRW.

- (Collector only) Consider enabling compression to reduce network bandwidth.

- Use load balancing to ensure that the TSDB can handle multiple write requests efficiently by distributing the load across available instances (see TSDB).
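The batching and compression recommendations can be illustrated with a small Python sketch: points are buffered and sent as one gzip-compressed payload per batch instead of one request per point. In the OpenTelemetry collector itself this is handled by the batch processor and the exporter's compression setting; the `Batcher` class below is a hypothetical stand-in, and it serializes to JSON only for readability (OTLP normally uses protobuf, and PRW uses snappy-compressed protobuf).

```python
import gzip
import json
import time
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Batcher:
    """Buffer metric points and send them as one gzip-compressed payload.

    A batch is flushed when it holds `max_points` points, or when a new point
    arrives more than `max_age_s` seconds after the first buffered one.
    (A production batcher would also flush on a timer.)
    """

    max_points: int = 1000
    max_age_s: float = 5.0
    sent: list = field(default_factory=list)  # stands in for network requests
    _buf: list = field(default_factory=list)
    _first_ts: Optional[float] = None

    def add(self, point: dict, now: Optional[float] = None) -> None:
        now = time.monotonic() if now is None else now
        if not self._buf:
            self._first_ts = now
        self._buf.append(point)
        if len(self._buf) >= self.max_points or now - self._first_ts >= self.max_age_s:
            self.flush()

    def flush(self) -> None:
        if not self._buf:
            return
        payload = json.dumps(self._buf).encode()
        self.sent.append(gzip.compress(payload))  # one write request per batch
        self._buf = []
        self._first_ts = None


if __name__ == "__main__":
    batcher = Batcher(max_points=500)
    # Hypothetical metric name and labels.
    points = [{"metric": "port_rx_bytes", "switch": "leaf-01", "port": i % 64, "value": i}
              for i in range(1200)]
    for p in points:
        batcher.add(p, now=0.0)
    batcher.flush()  # drain the partial last batch
    raw = len(json.dumps(points).encode())
    compressed = sum(len(b) for b in batcher.sent)
    print(f"{len(batcher.sent)} requests instead of {len(points)}; "
          f"{raw} bytes as JSON, {compressed} bytes gzip-compressed")
```

With 1,200 points and a batch size of 500, the sketch issues three write requests instead of 1,200, and the repetitive label data compresses well.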

TSDB

For scalable and efficient telemetry storage, NVIDIA recommends you use a high-performance TSDB, such as:

- Thanos is a CNCF incubating project. It is a set of components that can be composed into a highly available metric system with unlimited storage capacity, and it can be added seamlessly on top of existing Prometheus deployments. OTLP support is planned for upcoming releases.

- Mimir from Grafana Labs supports OTLP ingestion.

- VictoriaMetrics has a growing ecosystem and supports OTLP ingestion.

Key Considerations:

- Given the scale of AI data centers and the number of switches, Prometheus might not be able to handle the load without significant modifications and optimizations.

- If your telemetry stack is already built around Prometheus, all three databases support the PromQL query language.

- Mimir and VictoriaMetrics currently support OTLP; OTLP support in Thanos is planned.

- Ensure that your TSDB can handle the expected data ingestion rate and retention policy based on your network size and telemetry volume. All three TSDBs can scale both the ingestion (receiving) side and the storage side. Refer to each TSDB's documentation for scaling guidance.
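A rough ingestion and storage estimate follows from simple arithmetic: every time series produces one sample per sampling interval, so the ingestion rate is switches × series per switch ÷ interval. The Python sketch below applies this; the switch count, series per switch, retention, and especially the 1 byte per stored sample are hypothetical placeholders, since compressed sample size varies by TSDB and data shape.

```python
SECONDS_PER_DAY = 86_400


def samples_per_second(switches: int, series_per_switch: int, interval_s: float) -> float:
    """Ingestion rate: every series produces one sample per sampling interval."""
    return switches * series_per_switch / interval_s


def storage_gb(rate: float, retention_days: int, bytes_per_sample: float) -> float:
    """Approximate on-disk size for the retention period (decimal GB)."""
    return rate * SECONDS_PER_DAY * retention_days * bytes_per_sample / 1e9


if __name__ == "__main__":
    # Hypothetical inputs: replace with measurements from your own fabric.
    rate = samples_per_second(switches=256, series_per_switch=10_000, interval_s=15)
    size = storage_gb(rate, retention_days=30, bytes_per_sample=1.0)
    print(f"~{rate:,.0f} samples/s, ~{size:,.0f} GB for 30 days")
```

With these placeholder inputs the sketch prints about 170,667 samples/s and 442 GB. Note that halving the sampling interval doubles both the ingestion rate and the storage footprint.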

Resource Requirements for VAST Scale

Based on VAST’s deployment of 68 switches (and growing) and a sampling interval of 15 to 30 seconds, the following approximate resources are required for ingestion using OTLP (PRW typically demands more resources) into a VictoriaMetrics TSDB:

Collector requirements [optional]:

- Number of collectors: 1-2 OpenTelemetry collectors, each with one CPU and 1 GB of RAM.

TSDB requirements (VictoriaMetrics Example):

- VMInsert - Four containers, each with one CPU and 2 GB of RAM.

- VMStorage - Four containers, each with one CPU and 2 GB of RAM.

- VMSelect - Query service; its resource requirements depend on the nature and number of queries.
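The VAST figures above can be tallied into total CPU and RAM, with and without the optional collector, as in the following Python sketch. It only sums the listed numbers; VMSelect is excluded because its sizing depends on the query workload, and the `Component` type and `total` helper are hypothetical names for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Component:
    name: str
    min_count: int
    max_count: int
    cpus_each: int
    ram_gb_each: int
    optional: bool = False


# Figures from the VAST example (68 switches, OTLP into VictoriaMetrics).
# VMSelect is left out because its sizing depends on the query workload.
VAST = [
    Component("otel-collector", 1, 2, cpus_each=1, ram_gb_each=1, optional=True),
    Component("vminsert", 4, 4, cpus_each=1, ram_gb_each=2),
    Component("vmstorage", 4, 4, cpus_each=1, ram_gb_each=2),
]


def total(components, include_optional=True):
    """Return ((min_cpus, max_cpus), (min_ram_gb, max_ram_gb))."""
    used = [c for c in components if include_optional or not c.optional]
    cpus = (sum(c.min_count * c.cpus_each for c in used),
            sum(c.max_count * c.cpus_each for c in used))
    ram = (sum(c.min_count * c.ram_gb_each for c in used),
           sum(c.max_count * c.ram_gb_each for c in used))
    return cpus, ram


if __name__ == "__main__":
    for label, opt in (("with collector", True), ("direct OTLP push", False)):
        (cpu_lo, cpu_hi), (ram_lo, ram_hi) = total(VAST, include_optional=opt)
        print(f"{label}: {cpu_lo}-{cpu_hi} CPUs, {ram_lo}-{ram_hi} GB RAM (excl. VMSelect)")
```

For this example, the ingestion path needs 9 to 10 CPUs and 17 to 18 GB of RAM with collectors, or 8 CPUs and 16 GB of RAM when the switches push OTLP directly to VictoriaMetrics.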

Conclusion

Implementing a robust switch telemetry collection system using OpenTelemetry and a scalable TSDB ensures reliable network monitoring and observability. Following these best practices helps you optimize performance, reduce operational overhead, and enhance long-term data retention capabilities. For further assistance, reach out to NVIDIA Support or consult the official OpenTelemetry and TSDB documentation.