Set Up Your VMware Virtual Machine for a Remote Server Cluster

First configure the VM on the master node, and then configure the VM on each worker node.

Follow these steps to set up and configure your VM on a cluster of servers in a remote deployment:

-

Verify that your master node meets the VM requirements.

| Resource | Minimum Requirements |
| --- | --- |
| Processor | Four (4) virtual CPUs |
| Memory | 8 GB RAM |
| Local disk storage | 64 GB |
| Network interface speed | 1 Gb NIC |
| Hypervisor | VMware ESXi™ 6.5 or later (OVA image) for servers running Cumulus Linux, CentOS, Ubuntu, and RedHat operating systems |

-

Confirm that the needed ports are open for communications.

You must open the following ports on your NetQ on-premises servers:

| Port or Protocol Number | Protocol | Component Access |
| --- | --- | --- |
| 4 | IP Protocol | Calico networking (IP-in-IP Protocol) |
| 22 | TCP | SSH |
| 80 | TCP | Nginx |
| 179 | TCP | Calico networking (BGP) |
| 443 | TCP | NetQ UI |
| 2379 | TCP | etcd datastore |
| 4789 | UDP | Calico networking (VxLAN) |
| 5000 | TCP | Docker registry |
| 6443 | TCP | kube-apiserver |
| 30001 | TCP | DPU communication |
| 31980 | TCP | NetQ Agent communication |
| 31982 | TCP | NetQ Agent SSL communication |
| 32708 | TCP | API Gateway |

Additionally, for internal cluster communication, you must open these ports:

| Port | Protocol | Component Access |
| --- | --- | --- |
| 8080 | TCP | Admin API |
| 5000 | TCP | Docker registry |
| 6443 | TCP | Kubernetes API server |
| 10250 | TCP | kubelet health probe |
| 2379 | TCP | etcd |
| 2380 | TCP | etcd |
| 7072 | TCP | Kafka JMX monitoring |
| 9092 | TCP | Kafka client |
| 7071 | TCP | Cassandra JMX monitoring |
| 7000 | TCP | Cassandra cluster communication |
| 9042 | TCP | Cassandra client |
| 7073 | TCP | Zookeeper JMX monitoring |
| 2888 | TCP | Zookeeper cluster communication |
| 3888 | TCP | Zookeeper cluster communication |
| 2181 | TCP | Zookeeper client |
| 36443 | TCP | Kubernetes control plane |

-

Download the NetQ Platform image.

- On the NVIDIA Application Hub, log in to your account.

- Select NVIDIA Licensing Portal.

- Select Software Downloads from the menu.

- Click Product Family and select NetQ.

- Locate the NetQ SW 4.2 VMWare Cloud image and select Download.

- If prompted, agree to the license agreement and proceed with the download.

For enterprise customers, if you do not see a link to the NVIDIA Licensing Portal on the NVIDIA Application Hub, contact NVIDIA support.

For NVIDIA employees, download NetQ directly from the NVIDIA Licensing Portal.


-

Set up and configure your VM.

VMware Example Configuration

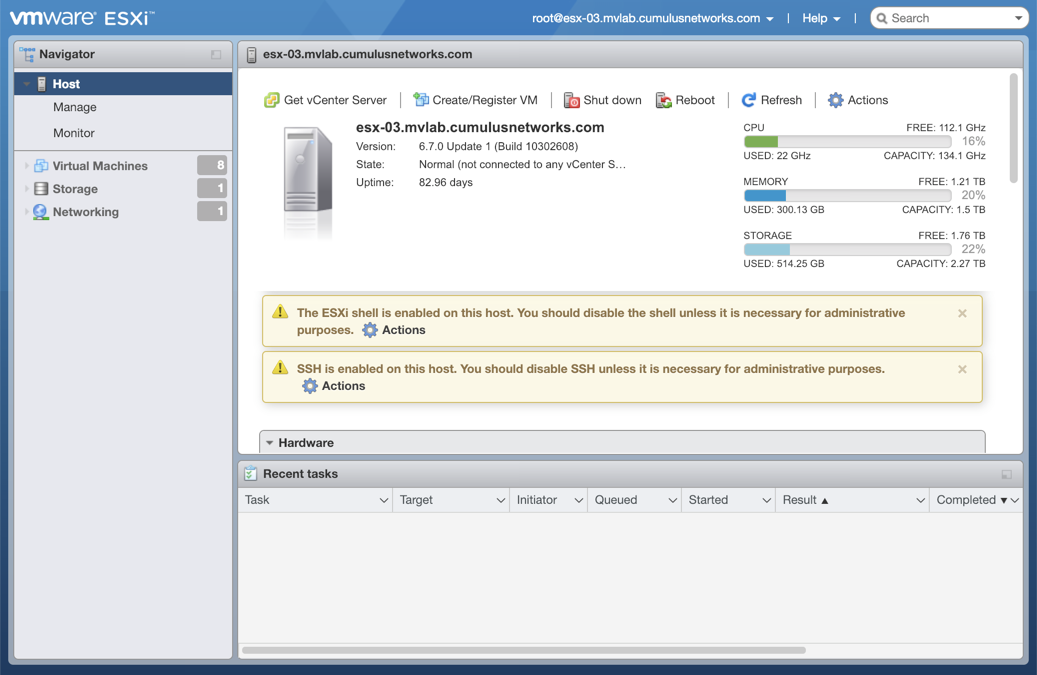

This example shows the VM setup process using an OVA file with VMware ESXi.

Enter the address of the ESXi host in your browser.

Log in to VMware using credentials with root access.

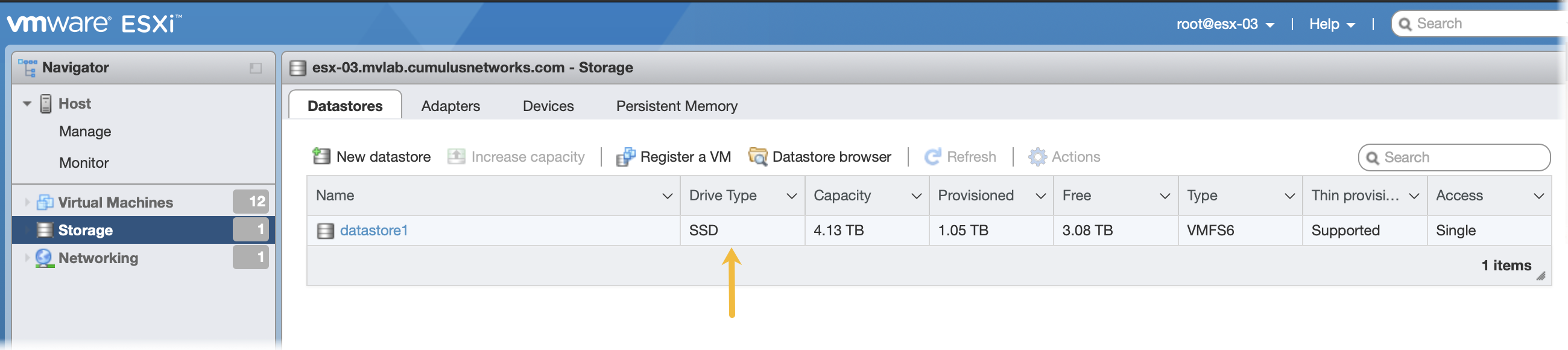

Click Storage in the Navigator to verify you have an SSD installed.

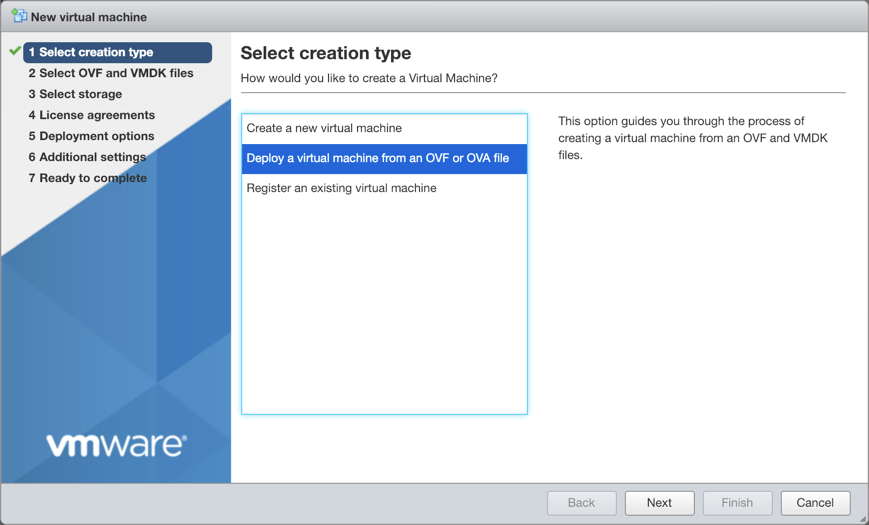

Click Create/Register VM at the top of the right pane.

Select Deploy a virtual machine from an OVF or OVA file, and click Next.

Provide a name for the VM, for example NetQ.

Tip: Make a note of the name you use during installation; you need it in a later step.

Drag the NetQ image file you downloaded from the NVIDIA Application Hub to the installation wizard, then click Next.

Select the storage type and data store for the image, then click Next.

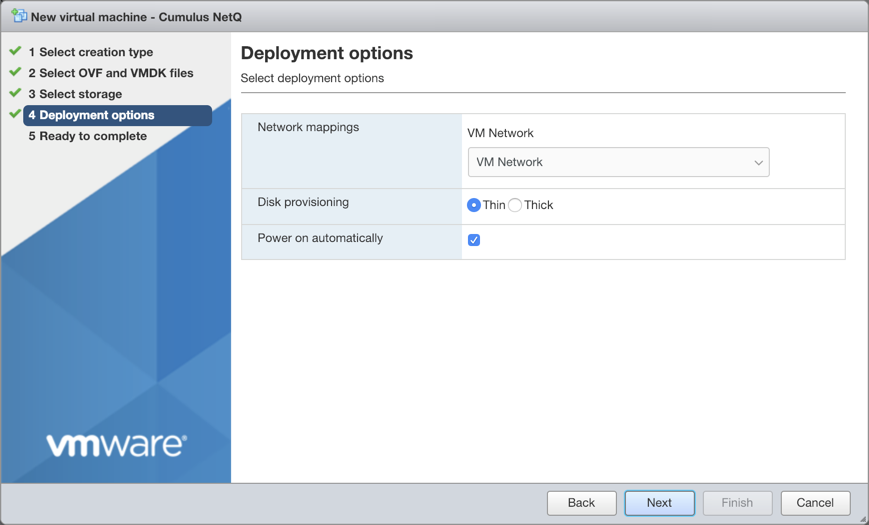

Accept the default deployment options or modify them according to your network needs. Click Next when you are finished.

Review the configuration summary. Click Back to change any of the settings, or click Finish to continue with the creation of the VM.

The progress of the request is shown in the Recent Tasks window at the bottom of the application. This may take some time. After the VM is deployed, the wizard displays the full hardware and configuration details.

-

Log in to the VM and change the password.

Use the default credentials to log in the first time:

- Username: cumulus

- Password: cumulus

$ ssh cumulus@<ipaddr>
Warning: Permanently added '<ipaddr>' (ECDSA) to the list of known hosts.
Ubuntu 22.04 LTS
cumulus@<ipaddr>'s password:
You are required to change your password immediately (root enforced)
System information as of Thu Dec 3 21:35:42 UTC 2024
System load: 0.09
Processes: 120
Usage of /: 8.1% of 61.86GB
Users logged in: 0
Memory usage: 5%
IP address for eth0: <ipaddr>
Swap usage: 0%
WARNING: Your password has expired.
You must change your password now and login again!
Changing password for cumulus.
(current) UNIX password: cumulus
Enter new UNIX password:
Retype new UNIX password:
passwd: password updated successfully
Connection to <ipaddr> closed.

Log in again with your new password.

$ ssh cumulus@<ipaddr>
Warning: Permanently added '<ipaddr>' (ECDSA) to the list of known hosts.
Ubuntu 22.04 LTS
cumulus@<ipaddr>'s password:
System information as of Thu Dec 3 21:35:59 UTC 2024
System load: 0.07
Processes: 121
Usage of /: 8.1% of 61.86GB
Users logged in: 0
Memory usage: 5%
IP address for eth0: <ipaddr>
Swap usage: 0%
Last login: Thu Dec 3 21:35:43 2024 from <local-ipaddr>
cumulus@ubuntu:~$

-

Verify the master node is ready for installation. Fix any errors indicated before installing the NetQ software.

cumulus@hostname:~$ sudo opta-check-cloud

-

Change the hostname for the VM from the default value.

The default hostname for the NetQ virtual machines is ubuntu. Change the hostname to fit your naming conventions while meeting Internet and Kubernetes naming standards.

Kubernetes requires a hostname to be a sequence of labels joined by dots. For example, “en.wikipedia.org” is a hostname. Each label must be 1 to 63 characters long, and the entire hostname, including the delimiting dots, can be at most 253 ASCII characters.

The Internet standards (RFCs) specify that labels may contain only the lowercase ASCII letters a through z, the digits 0 through 9, and the hyphen-minus character ('-').
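You can check a candidate name against these rules before you run hostnamectl. The POSIX shell function below is a minimal sketch, not a NetQ tool: `valid_hostname` is a hypothetical name, and the rule that a label cannot start or end with a hyphen comes from the DNS-1123 convention that Kubernetes enforces rather than from the text above.

```shell
#!/bin/sh
# Hypothetical helper: succeed only if $1 satisfies the hostname rules above.
# The body runs in a subshell ( ... ) so its variables and IFS stay local.
valid_hostname() (
  name=$1

  # The whole name, dots included, must be 1 to 253 characters long.
  [ -n "$name" ] && [ "${#name}" -le 253 ] || return 1

  case $name in
    # Only lowercase ASCII letters, digits, hyphens, and separating dots.
    *[!abcdefghijklmnopqrstuvwxyz0123456789.-]*) return 1 ;;
    # No empty labels: no leading dot, trailing dot, or double dot.
    .*|*.|*..*) return 1 ;;
  esac

  # Split on dots and check each label.
  IFS=.
  for label in $name; do
    # Each label is at most 63 characters (empty labels were rejected above).
    [ "${#label}" -le 63 ] || return 1
    # DNS-1123 (Kubernetes): a label cannot start or end with a hyphen.
    case $label in
      -*|*-) return 1 ;;
    esac
  done
  return 0
)

# Example (hypothetical hostname):
#   valid_hostname netq-master-01 && sudo hostnamectl set-hostname netq-master-01
```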

Use the following command:

cumulus@hostname:~$ sudo hostnamectl set-hostname NEW_HOSTNAME

Add the same NEW_HOSTNAME value to the localhost entry in /etc/hosts on your VM. For example:

127.0.0.1 localhost NEW_HOSTNAME
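If you prefer to script the /etc/hosts change, the sketch below appends the new name to the 127.0.0.1 line and prints the result instead of editing the file in place, so you can review it before copying it over. `hosts_with_name` is a hypothetical helper, and it assumes the localhost entry starts with 127.0.0.1 as in the example above.

```shell
#!/bin/sh
# Hypothetical helper: print a hosts file with NEW_NAME appended to its
# 127.0.0.1 line(s). The file itself is not modified.
hosts_with_name() (
  new_name=$1
  hosts_file=${2:-/etc/hosts}

  # Escape dots so the name is matched literally in the regular expression.
  escaped=$(printf '%s\n' "$new_name" | sed 's/\./\\./g')

  if grep -Eq "^127\.0\.0\.1[[:space:]](.*[[:space:]])?$escaped([[:space:]]|\$)" "$hosts_file"; then
    # The localhost entry already lists the name: print the file unchanged.
    cat "$hosts_file"
  else
    # Append the name to the end of every line that starts with 127.0.0.1.
    sed "/^127\.0\.0\.1[[:space:]]/s/\$/ $new_name/" "$hosts_file"
  fi
)

# Example (hypothetical hostname):
#   hosts_with_name netq-master-01 > /tmp/hosts.new
#   sudo cp /tmp/hosts.new /etc/hosts
```

Because the helper leaves the file alone when the name is already present, running it twice does not add a duplicate entry.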

-

Run the Bootstrap CLI. Be sure to replace the eth0 interface used in this example with the interface on the server used to listen for NetQ Agents.

cumulus@hostname:~$ netq bootstrap master interface eth0 tarball /mnt/installables/netq-bootstrap-4.0.0.tgz

Allow about five to ten minutes for this to complete, and only then continue to the next step.
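If you script this step, a small wrapper can catch two easy mistakes: passing an interface that does not exist on the VM, and retrying on top of a failed bootstrap. The sketch below is not part of NetQ: `bootstrap_master` and the `NET_SYSFS` override are hypothetical names, it assumes `netq` exits with a non-zero status when the bootstrap fails, and on failure it runs `netq bootstrap reset` as the next paragraph recommends.

```shell
#!/bin/sh
# Hypothetical wrapper around the bootstrap command shown above.
# NET_SYSFS can be overridden (for example, in tests); it defaults to the
# kernel's directory of network interfaces.
NET_SYSFS=${NET_SYSFS:-/sys/class/net}

bootstrap_master() (
  iface=$1
  tarball=$2

  # Refuse to start if the interface does not exist on this VM.
  if [ ! -e "$NET_SYSFS/$iface" ]; then
    echo "Interface $iface not found; use the interface that listens for NetQ Agents." >&2
    return 1
  fi

  # On failure, reset so that the next attempt starts from a clean state.
  if ! netq bootstrap master interface "$iface" tarball "$tarball"; then
    echo "Bootstrap failed; running 'netq bootstrap reset' before you retry." >&2
    netq bootstrap reset
    return 1
  fi
)

# Example:
#   bootstrap_master eth0 /mnt/installables/netq-bootstrap-4.0.0.tgz
```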

If this step fails for any reason, you can run netq bootstrap reset and then try again.

If you have changed the IP address or hostname of the NetQ Cloud VM after this step, you need to re-register this address with NetQ as follows:

Reset the VM.

cumulus@hostname:~$ netq bootstrap reset

Re-run the Bootstrap CLI. This example uses interface eth0. Replace this with your updated IP address, hostname, or interface using the interface or ip-addr option.

cumulus@hostname:~$ netq bootstrap master interface eth0 tarball /mnt/installables/netq-bootstrap-4.0.0.tgz

-

Consider the following for container environments, and make adjustments as needed.

Flannel Virtual Networks

If you are using Flannel with a container environment on your network, you may need to change its default IP address range if it conflicts with other addresses on your network. You can change the range only once, during the first installation.

NetQ uses the address range 10.244.0.0/16, which overrides the original Flannel default of 10.1.0.0/16.

To change the default address range, use the CLI with the pod-ip-range option. For example:

cumulus@hostname:~$ netq bootstrap master interface eth0 tarball /mnt/installables/netq-bootstrap-4.0.0.tgz pod-ip-range 10.255.0.0/16
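To see whether a candidate range such as 10.255.0.0/16 conflicts with a subnet already in use, you can compare the two CIDR blocks. The POSIX shell sketch below is one way to do that; the function names are hypothetical, and it only compares the blocks you pass in, so gathering your network's subnets (for example, from `ip -4 route`) is up to you.

```shell
#!/bin/sh
# Hypothetical helper: convert a dotted IPv4 address to a 32-bit integer.
ip_to_int() (
  IFS=.
  set -- $1
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
)

# Hypothetical helper: print the first and last address of a CIDR block
# as integers, for example "10.244.0.0/16".
cidr_range() (
  addr=${1%/*}
  bits=${1#*/}
  base=$(ip_to_int "$addr")
  size=$(( 1 << (32 - bits) ))
  start=$(( base - base % size ))   # clear any host bits
  echo "$start $(( start + size - 1 ))"
)

# Succeed if the two CIDR blocks share at least one address.
cidrs_overlap() (
  set -- $(cidr_range "$1") $(cidr_range "$2")
  # Two ranges overlap when each one starts before the other one ends.
  [ "$1" -le "$4" ] && [ "$3" -le "$2" ]
)

# Example (hypothetical in-use subnet):
#   cidrs_overlap 10.255.0.0/16 10.255.8.0/22 && echo "conflict: choose another pod-ip-range"
```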

Docker Default Bridge Interface

The default Docker bridge interface is disabled in NetQ. If you need to re-enable the interface, contact support.

-

Verify that your first worker node meets the VM requirements, as described in Step 1.
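Besides opta-check-cloud, you can spot-check the CPU, memory, and disk minimums from Step 1 from a shell on the node. This POSIX shell sketch uses nproc, /proc/meminfo, and lsblk; the function names are hypothetical, it treats the GB figures in the table as GiB (an assumption), and it rounds to the nearest GiB because the kernel reports slightly less memory than the VM is configured with. It does not check NIC speed or the hypervisor version, which you can confirm in VMware.

```shell
#!/bin/sh
# Hypothetical helper: compare an integer value against a minimum.
check_min() (
  label=$1 actual=$2 minimum=$3
  if [ "$actual" -ge "$minimum" ]; then
    echo "PASS: $label = $actual (minimum $minimum)"
  else
    echo "FAIL: $label = $actual (minimum $minimum)"
    return 1
  fi
)

# Hypothetical helper: check this VM against the Step 1 minimums.
check_vm_requirements() (
  status=0
  cpus=$(nproc)
  # MemTotal is reported in KiB; round to the nearest GiB.
  mem_gib=$(awk '/^MemTotal:/ { printf "%d\n", ($2 + 524288) / 1048576 }' /proc/meminfo)
  # Sum the sizes (in bytes) of all whole disks; round to the nearest GiB.
  disk_gib=$(lsblk -dbno SIZE,TYPE | awk '$2 == "disk" { s += $1 } END { printf "%d\n", (s + 536870912) / 1073741824 }')

  check_min "Virtual CPUs" "$cpus" 4 || status=1
  check_min "Memory (GiB)" "$mem_gib" 8 || status=1
  check_min "Disk (GiB)" "$disk_gib" 64 || status=1
  return "$status"
)

# Example: check_vm_requirements
```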

-

Confirm that the needed ports are open for communications, as described in Step 2.
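If your VMs use ufw, Ubuntu's firewall front end, the sketch below prints ufw commands for the TCP and UDP ports in the Step 2 tables so you can review them before running them. Using ufw is an assumption about your setup, and `print_ufw_rules` and `NETQ_PORTS` are hypothetical names. IP protocol 4 (IP-in-IP) is not a port and is left out, so handle it separately if your firewall filters by protocol.

```shell
#!/bin/sh
# TCP/UDP ports from the two tables in Step 2, with duplicates removed.
NETQ_PORTS="22/tcp 80/tcp 179/tcp 443/tcp 2379/tcp 4789/udp 5000/tcp 6443/tcp
30001/tcp 31980/tcp 31982/tcp 32708/tcp
8080/tcp 10250/tcp 2380/tcp 7072/tcp 9092/tcp 7071/tcp 7000/tcp 9042/tcp
7073/tcp 2888/tcp 3888/tcp 2181/tcp 36443/tcp"

# Hypothetical helper: print one ufw rule per port without applying it.
print_ufw_rules() {
  for rule in $NETQ_PORTS; do
    echo "sudo ufw allow $rule"
  done
}

# Example: review the output, apply it, then confirm with 'sudo ufw status'.
#   print_ufw_rules > netq-ufw.sh && sh netq-ufw.sh
```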

-

Open your hypervisor and set up the VM in the same manner as for the master node.

Make a note of the private IP address you assign to the worker node. You need it for later installation steps.
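To read the worker's address from inside the VM, you can filter the output of `ip -o -4 addr show`. The awk filter below is a minimal sketch; `ipv4_addrs` is a hypothetical name, and eth0 in the example is an assumption about the interface name.

```shell
#!/bin/sh
# Hypothetical helper: read `ip -o -4 addr show` output on stdin and print
# each IPv4 address without its prefix length.
ipv4_addrs() {
  awk '{
    for (i = 1; i < NF; i++)
      if ($i == "inet") {
        split($(i + 1), parts, "/")   # "10.20.30.41/24" -> "10.20.30.41"
        print parts[1]
      }
  }'
}

# Example (assumes the worker's interface is eth0):
#   ip -o -4 addr show dev eth0 | ipv4_addrs
```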

-

Verify the worker node is ready for installation. Fix any errors indicated before installing the NetQ software.

cumulus@hostname:~$ sudo opta-check-cloud

-

Repeat Steps 10 through 13 for each additional worker node you want in your cluster.
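Once each additional worker VM is deployed, you can run the readiness check from Step 13 against all workers from one machine. This sketch assumes SSH access as the cumulus user to each worker's private IP address; `check_workers` is a hypothetical helper and the addresses in the example are placeholders. ssh exits with the remote command's status, so the warning appears when the connection fails or opta-check-cloud exits non-zero.

```shell
#!/bin/sh
# Hypothetical helper: run opta-check-cloud on each worker over SSH.
# -t allocates a terminal so that sudo can prompt for the password.
check_workers() (
  status=0
  for worker in "$@"; do
    echo "== $worker =="
    if ! ssh -t "cumulus@$worker" sudo opta-check-cloud; then
      echo "WARNING: the check on $worker did not finish successfully" >&2
      status=1
    fi
  done
  return "$status"
)

# Example (placeholder addresses):
#   check_workers 10.20.30.41 10.20.30.42
```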

The final step is to install and activate the NetQ software using the CLI: